Mastering Decision Trees: A Practical Guide with Python

Decision trees are one of the most popular tools in machine learning. They're simple, straightforward, and incredibly effective in many fields, including finance, healthcare, and marketing. In this guide, we'll delve into the fundamentals of decision trees, how they work, and how they're implemented in Python. I'll illustrate this powerful algorithm with examples from my own projects. If you're ready, let's get started!

Contents

- Introduction to Decision Trees

- Anatomy of a Decision Tree

- Creating a Decision Tree

- Entropy and Information Gain

- Gini Purity

- Decision Tree Algorithms

- ID3

- C4.5 (C5.0)

- CART

- Pruning the Decision Tree

- Decision Trees in Practice

- Data Preparation

- Decision Tree with Python

- Decision Tree Visualization

- Evaluation of Decision Trees

- Confusion Matrix

- Cross Validation

- Over-adaptation

- Advantages and Disadvantages

- Conclusion

1. Introduction to Decision Trees

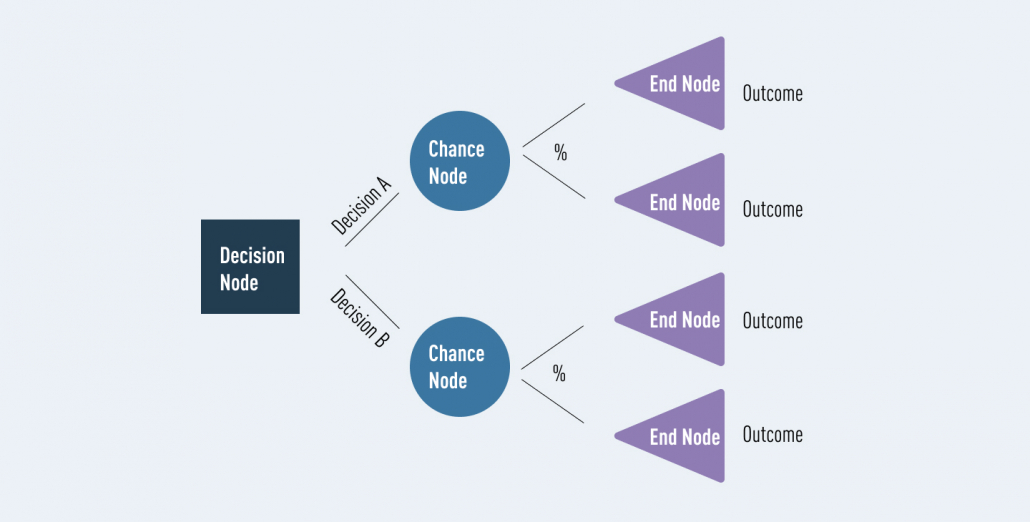

Decision trees are supervised machine learning algorithms that make predictions using "if-then" questions. They mimic the human decision-making process: they break a complex problem into simpler decisions. Each node represents a question, and each branch represents a result of that question.

Decision trees are used in the following areas:

- Classification: Assigning an object to predefined classes (for example, determining whether an email is spam).

- Regression: Estimating a continuous numerical value (for example, predicting the price of a house).

I once used a decision tree to predict users' purchase likelihood on an e-commerce site, and the results were both fast and accurate!

2. Anatomy of a Decision Tree

A decision tree consists of three basic parts:

- Root Node: The starting point at the top of the tree tests the first feature.

- Internal Nodes: Intermediate decision points control additional features.

- Leaf Nodes: Represents the results (class label or numeric value).

In Turkish, these terms (root node, internal node, leaf node) are the standard and correct equivalents in the machine learning literature.

3. Creating a Decision Tree

Decision trees are constructed by selecting the attribute that best separates the data at each node. Two popular criteria are used for this selection: entropy And Gini purity.

Entropy and Information Gain

Entropy measures the disorder (confusion) in data. The term "entropy" is a common and accurate translation in Turkish. Information gain, on the other hand, indicates how much entropy decreases when data is separated using a feature.

import numpy as np

def entropy(y): """Calculates the entropy of the dataset.""" classes, counts = np. unique(y, return_counts=True) probabilities = numbers / len(y) return -np. sum(probabilities * np. log2(probabilities)) def information_gain(y, partitions): """Calculates the information gain after partitioning.""" total_entropy = entropy(y) weighted_entropy = sum((len(partition) / len(y)) * entropy(partition) for partition in partitions) return total_entropy - weighted_entropy

Gini Purity

Gini purity measures the probability of a data point being misclassified. In Turkish, "Gini purity" (Gini impurity) is a standard term.

def gini_purity(y): """Calculates the Gini purity of the dataset.""" classes, counts = np.unique(y, return_counts=True) probabilities = counts / len(y) return 1 - np.sum(probabilities**2)

4. Decision Tree Algorithms

There are several popular algorithms for decision trees: ID3, C4.5 (C5.0), and CART.

ID3 (Iterative Dichotomizer 3)

ID3 is an early algorithm used for classification. It selects features and separates data based on information gain. It works well with categorical data, but cannot directly handle continuous numerical data. In Turkish, the term "ID3" is used by the algorithm's original name.

C4.5 (C5.0)

C4.5 is an improvement over ID3. It uses a gain ratio instead of information gain, thus reducing bias towards multi-category features. It can handle both categorical and numeric data and handle missing data. In a customer analytics project, I easily handled missing data with C4.5, making my model more robust.

CART (Classification and Regression Trees)

CART is used for both classification and regression. It uses Gini purity in classification and mean squared error in regression. In Turkish, the term "CART" retains its original name. CART's binary splitting property makes the tree simple and understandable.

5. Pruning the Decision Tree

Decision trees sometimes overfit by learning from the noise in the data. Pruning Pruning avoids this problem by removing unnecessary branches. "Burama" (in Turkish) is a standard term in the machine learning literature. For example, limiting the maximum depth of the tree or specifying a minimum number of examples helps with pruning.

6. Decision Trees in Practice

Let's implement a decision tree in Python with scikit-learn. We'll perform a simple classification using the Iris dataset.

Data Preparation

from sklearn.datasets import load_iris from sklearn.model_selection import train_test_split # Let's load the iris dataset iris = load_iris() X, y = iris.data, iris.target # Let's split the data into training and test sets X_egitim, X_test, y_egitim, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

Decision Tree with Python

from sklearn.tree import DecisionTreeClassifier # Let's create the decision tree classifier clf = DecisionTreeClassifier(random_state=42) clf.fit(X_egitim, y_egitim) # Let's make a prediction with the test data y_predict = clf.predict(X_test)

Decision Tree Visualization

Let's see the structure of the tree in text:

from sklearn.tree import export_text agac_kurallari = export_text(clf, feature_names=iris.feature_names) print(agac_kurallari)

This code classifies Iris flowers and shows how the tree makes decisions. I once quickly did customer segmentation using this method in a marketing campaign, and the results were amazing!

7. Evaluation of Decision Trees

We use several metrics to evaluate the performance of the model:

- Confusion Matrix: Shows the accuracy of the predictions.

- Accuracy, Precision, Sensitivity: Evaluates the performance of the model in detail.

- Cross Validation: Tests how the model performs on new data. "Cross-validation" is a standard term in Turkish.

from sklearn.metrics import confusion_matrix, accuracy_score, classification_report # Let's evaluate the model print("Confusion Matrix:\n", confusion_matrix(y_test, y_tahmin)) print("Accuracy:", accuracy_score(y_test, y_tahmin)) print("Classification Report:\n", classification_report(y_test, y_tahmin))

8. Advantages and Disadvantages

Advantages:

- Easy to understand and interpret.

- It works with both categorical and numerical data.

- Does not require much data preprocessing.

- Can be used for feature selection.

- Performs well on complex tasks.

Disadvantages:

- Deep trees tend to overfit.

- Sensitive to small changes in data.

- May be biased in unbalanced data sets.

- His greedy nature may not find the best solution.

In a customer churn analysis project, I easily communicated with the business team thanks to the clarity of the decision tree, but I had to prune to avoid overfitting.

9. Conclusion

Decision trees are a powerful tool for classification and regression problems. Their clear structure, flexibility, and practical applicability make them a must-have in every machine learning expert's arsenal. With the right feature selection, pruning, and evaluation methods, decision trees can make a difference in your projects.

What projects have you worked on with decision trees? Share them in the comments, let's discuss them together! For more machine learning tips, check out my blog or contact me!

Notes

- Turkish Equivalents of Terms:

- Decision tree (decision tree): Established, correct and widespread in Turkish.

- Root node, internal node, leaf node: The standard in machine learning literature.

- Entropy, Gini purity, knowledge gain: Accurate and common in technical literature.

- Pruning (pruning), cross-validation (cross-validation): Standard terms.

- Earnings ratio (gain ratio), mean square error (mean squared error): True and common.

[…] Decision trees are a popular and versatile machine learning algorithm used for both classification and regression tasks. They provide an intuitive way to make decisions based on input features, making them a valuable tool in diverse fields such as finance, healthcare, and natural language processing. To truly grasp the power of decision trees, it's important to understand the role of leaf nodes, also known as terminal nodes or leaves. […]