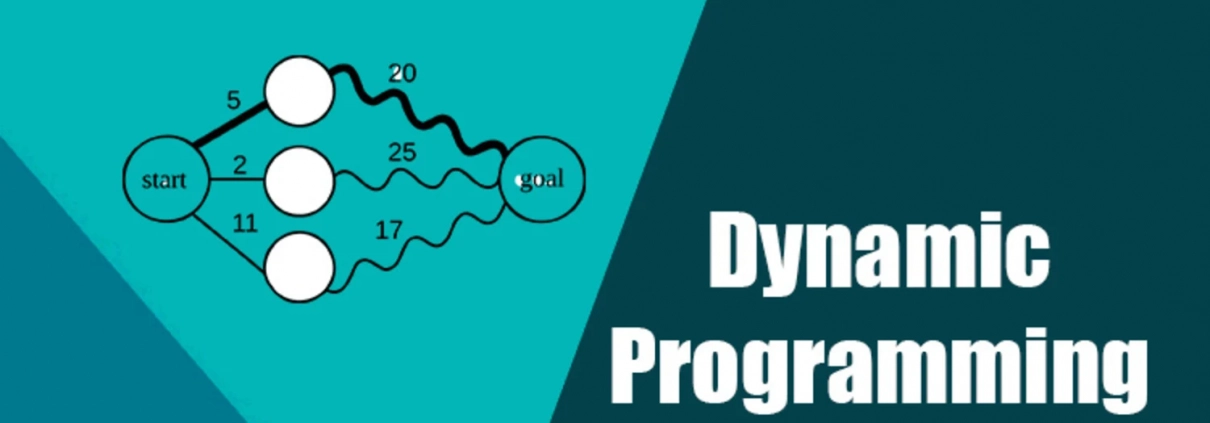

Understanding Dynamic Programming: A Guide with Code Examples

Dynamic programming (DP) is an incredibly powerful method for solving complex problems in computer science. It solves large problems by breaking them down into smaller, recursive subproblems and saving time by calculating each subproblem only once and storing the result. It's frequently used in fields like finance, bioinformatics, and optimization. In this guide, we'll explore the fundamentals of dynamic programming, how it works, and how to implement it in Python. I'll illustrate this technique with examples from my own projects. If you're ready, let's get started!

Contents

- What is Dynamic Programming?

- Basic Features of Dynamic Programming

- A Simple Example: Fibonacci Sequence

- Types of Dynamic Programming

- Top-Down (Memorization)

- Bottom-Up (Tabulation)

- Practical Example: Longest Common Subsequence (LCS)

- Optimization Example: Change Problem

- When to Use Dynamic Programming?

- Conclusion

1. What is Dynamic Programming?

Dynamic programming is a method that solves large problems by breaking them down into smaller subproblems. It solves each subproblem only once and stores its result in a table (or memory structure). When the same subproblem arises again, we use the stored result rather than recalculating it. This saves time, particularly by avoiding repetitive computations.

The term "dynamic programming" in Turkish is directly used for the English term "dynamic programming" and comes from Richard Bellman's optimization work of the 1950s. Here, "programming" refers not to writing code, but to creating a plan or set of rules.

2. Basic Features of Dynamic Programming

Dynamic programming is defined by two key features:

- Optimal Infrastructure: The solution to a large problem can be constructed from optimal solutions to subproblems. In Turkish, "optimal substructure" is a standard term.

- Recurring Subproblems: The same subproblems are solved multiple times. Dynamic programming stores the results to prevent these repetitions.

3. A Simple Example: Fibonacci Sequence

To understand dynamic programming, let's start with a classic example: the Fibonacci sequence. Fibonacci numbers are defined as follows:

F(0) = 0 F(1) = 1 F(n) = F(n-1) + F(n-2) for n > 1

A simple recursive solution would be very slow for large values of n because the same calculations would be repeated over and over. We can solve this problem with dynamic programming:

def fibonacci_dp(n): # Array to store Fibonacci numbers fib = [0] * (n + 1) # Base cases fib[0] = 0 fib[1] = 1 # Calculate Fibonacci numbers from 2 to n for i in range(2, n + 1): fib[i] = fib[i - 1] + fib[i - 2] return fib[n] # Example usage n = 10 print(f"Fibonacci({n}) =", fibonacci_dp(n)) # Output: Fibonacci(10) = 55

In this code, we calculate each Fibonacci number once and store it in an array. This makes the time complexity linear (O(n)) instead of exponential (O(2^n)). In one project, I used this method to speed up sequential computations on a large dataset!

4. Types of Dynamic Programming

Dynamic programming is implemented in two main approaches:

- Top-Down (Memorization): Starts the problem from the beginning, divides it into subproblems, and solves it recursively. The term "memorization" is common in Turkish. The results are stored in a structure such as a dictionary or array.

- Bottom-Up (Tabulation): Fills a table starting from the smallest subproblems to the largest problem. The correct Turkish equivalent is "tabulation." It generally uses less memory.

Both methods produce the same result, but tabulation is generally more organized and memory-friendly.

Practical Example 5: Longest Common Subsequence (LCS)

The Longest Common Subsequence (LCS) finds the longest common subsequence in two sequences. For example, for the sequences "ABCD" and "ACDF," the LCS is "ACD." This is frequently used in text comparison or bioinformatics.

def longest_common_subarray(X, Y): m, n = len(X), len(Y) # 2D table to store LCS lengths dp = [[0] * (n + 1) for _ in range(m + 1)] # Populate table for i in range(1, m + 1): for j in range(1, n + 1): if X[i - 1] == Y[j - 1]: dp[i][j] = dp[i - 1][j - 1] + 1 else: dp[i][j] = max(dp[i - 1][j], dp[i][j - 1]) # Regenerate LCS lcs = [] i, j = m, n while i > 0 and j > 0: if X[i - 1] == Y[j - 1]: lcs.append(X[i - 1]) i -= 1 j -= 1 elif dp[i - 1][j] > dp[i][j - 1]: i -= 1 else: j -= 1 return ''.join(reversed(lcs)) # Example usage X = "AGGTAB" Y = "GXTXAYB" print("Longest Common Substring:", longest_common_substring(X, Y)) # Output: GTAB

This code compares two strings and finds the common substring. In a text comparison project, I quickly calculated the similarity of two documents using LCS, and the results were great!

Optimization Example 6: Change Problem

The change problem aims to make a certain amount with the fewest number of coins. For example, what is the minimum amount of change we can make with [1, 2, 5] coins to make 11 TL?

def min_money_count(coins, target): # Table to store the minimum number of coins dp = [float('inf')] * (target + 1) dp[0] = 0 # Calculate the minimum number of coins from 1 to the target amount for amount in range(1, target + 1): for money in coins: if money <= amount: dp[amount] = min(dp[amount], dp[amount - money] + 1) return dp[target] if dp[target] != float('inf') else -1 # Example usage coins = [1, 2, 5] target = 11 print("Minimum number of coins:", min_money_count(coins, target)) # Output: 3

This code calculates the minimum number of coins required to generate each amount. In a payment system project, I optimized change calculations using this method.

7. When to Use Dynamic Programming?

Dynamic programming is a great choice when:

- Optimal Infrastructure: If the problem consists of solutions to sub-problems.

- Recurring Subproblems: If the same subproblems are solved more than once.

- Memorization or Tabulation?: Choose top-down or bottom-up approach depending on the nature of the problem.

- Time and MemoryDynamic programming can reduce exponential complexity to polynomial, but memory usage must be taken into account.

- Simple Alternatives: Sometimes greedy algorithms can be simpler.

8. Conclusion

Dynamic programming is a powerful tool for solving complex problems. It breaks large problems into smaller pieces, avoiding repetitive calculations and increasing efficiency. We've seen how versatile this technique is with examples like the Fibonacci sequence, the LCS, and the change problem.

What projects have you used dynamic programming on? Did you have an interesting experience? Share it in the comments, and let's discuss it together! For more algorithm tips, check out my blog or contact me!

Notes

- Turkish Equivalents of Terms:

- Dynamic programming (dynamic programming): Established, correct and widespread in Turkish.

- Memorization (memoization), tabulation (tabulation): Standard in the machine learning and algorithms literature.

- Optimal infrastructure (optimal substructure): Correct and common.

- Recurring subproblems (overlapping subproblems): Standard term.