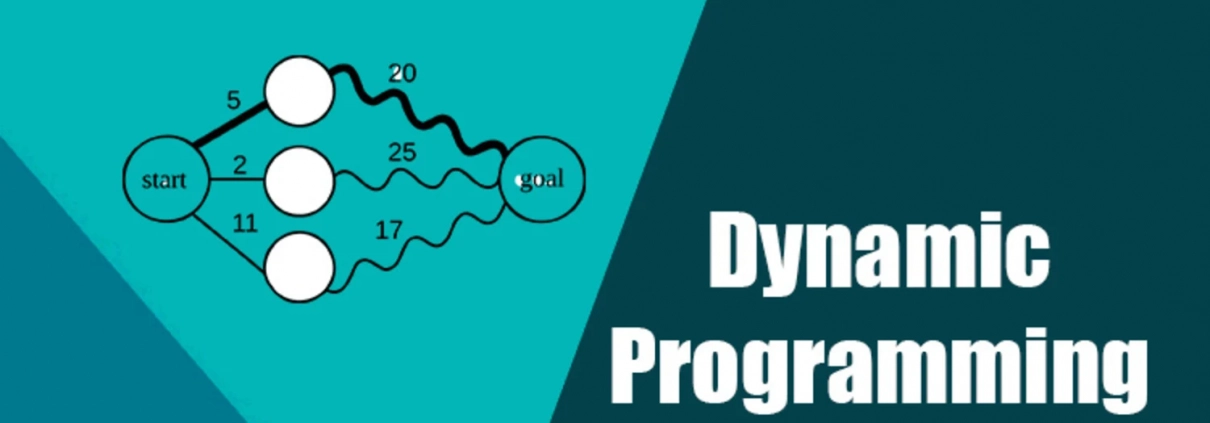

Dinamik Programlamayı Anlama: Kod Örnekleriyle Bir Kılavuz

Dinamik programlama (DP), bilgisayar bilimlerinde karmaşık problemleri çözmek için inanılmaz güçlü bir yöntem. Büyük problemleri küçük, tekrar eden alt problemlere bölerek çözüyor ve her alt problemi sadece bir kez hesaplayıp sonucunu saklayarak zaman kazandırıyor. Finans, biyoinformatik ya da optimizasyon gibi alanlarda sıkça kullanılıyor. Bu rehberde, dinamik programlamanın temellerini, nasıl çalıştığını ve Python ile nasıl uygulandığını keşfedeceğiz. Kendi projelerimden örneklerle, bu tekniği sizin için anlaşılır hale getireceğim. Hazırsanız, başlayalım!

İçindekiler

- Dinamik Programlama Nedir?

- Dinamik Programlamanın Temel Özellikleri

- Basit Bir Örnek: Fibonacci Dizisi

- Dinamik Programlama Türleri

- Yukarıdan Aşağıya (Memorizasyon)

- Aşağıdan Yukarıya (Tablolama)

- Pratik Örnek: En Uzun Ortak Alt Dizi (LCS)

- Optimizasyon Örneği: Para Üstü Problemi

- Dinamik Programlamayı Ne Zaman Kullanmalı?

- Sonuç

1. Dinamik Programlama Nedir?

Dinamik programlama, büyük problemleri küçük alt problemlere bölerek çözen bir yöntemdir. Her alt problemi sadece bir kez çözer ve sonucunu bir tabloda (veya hafıza yapısında) saklar. Aynı alt problem tekrar karşımıza çıktığında, yeniden hesaplamak yerine saklanan sonucu kullanırız. Bu, özellikle tekrar eden hesaplamaları önleyerek zaman tasarrufu sağlar.

Türkçe’de “dinamik programlama” terimi, İngilizce’deki “dynamic programming” için doğrudan kullanılır ve Richard Bellman’ın 1950’lerdeki optimizasyon çalışmalarından gelir. Burada “programlama”, kod yazmaktan değil, bir plan ya da kural seti oluşturmaktan bahsediyor.

2. Dinamik Programlamanın Temel Özellikleri

Dinamik programlama, şu iki temel özellikle tanımlanır:

- Optimal Alt Yapı: Büyük bir problemin çözümü, alt problemlerin optimal çözümlerinden oluşturulabilir. Türkçe’de “optimal alt yapı” (optimal substructure) standart bir terim.

- Tekrar Eden Alt Problemler: Aynı alt problemler birden fazla kez çözülür. Dinamik programlama, bu tekrarları önlemek için sonuçları saklar.

3. Basit Bir Örnek: Fibonacci Dizisi

Dinamik programlamayı anlamak için klasik bir örnekle başlayalım: Fibonacci dizisi. Fibonacci sayıları şöyle tanımlanır:

F(0) = 0 F(1) = 1 F(n) = F(n-1) + F(n-2) için n > 1

Basit bir rekürsif çözüm, büyük n değerlerinde çok yavaş olur çünkü aynı hesaplamalar tekrar tekrar yapılır. Dinamik programlama ile bu sorunu çözebiliriz:

def fibonacci_dp(n):

# Fibonacci sayılarını saklamak için dizi

fib = [0] * (n + 1)

# Temel durumlar

fib[0] = 0

fib[1] = 1

# 2’den n’e kadar Fibonacci sayılarını hesapla

for i in range(2, n + 1):

fib[i] = fib[i - 1] + fib[i - 2]

return fib[n]

# Örnek kullanım

n = 10

print(f"Fibonacci({n}) =", fibonacci_dp(n)) # Çıktı: Fibonacci(10) = 55

Bu kodda, her Fibonacci sayısını bir kez hesaplıyor ve bir dizide saklıyoruz. Böylece, zaman karmaşıklığı üssel (O(2^n)) yerine doğrusal (O(n)) hale geliyor. Bir projemde, bu yöntemi kullanarak büyük bir veri setinde sıralı hesaplamaları hızlandırdım!

4. Dinamik Programlama Türleri

Dinamik programlama iki ana yaklaşımla uygulanır:

- Yukarıdan Aşağıya (Memorizasyon): Probleme en baştan başlar, alt problemlere bölerek rekürsif bir şekilde çözer. Türkçe’de “memorizasyon” (memoization) terimi yaygın. Sonuçlar, bir sözlük veya dizi gibi bir yapıda saklanır.

- Aşağıdan Yukarıya (Tablolama): En küçük alt problemlerden başlayarak, büyük probleme doğru bir tablo doldurur. Türkçe’de “tablolama” (tabulation) doğru bir karşılık. Genellikle daha az bellek kullanır.

Her iki yöntem de aynı sonucu verir, ama tablolama genellikle daha düzenli ve bellek dostudur.

5. Pratik Örnek: En Uzun Ortak Alt Dizi (LCS)

En Uzun Ortak Alt Dizi (LCS), iki dizideki en uzun ortak alt diziyi bulur. Örneğin, “ABCD” ve “ACDF” dizileri için LCS, “ACD”dir. Bu, metin karşılaştırma veya biyoinformatikte sıkça kullanılır.

def en_uzun_ortak_alt_dizi(X, Y):

m, n = len(X), len(Y)

# LCS uzunluklarını saklamak için 2D tablo

dp = [[0] * (n + 1) for _ in range(m + 1)]

# Tabloyu doldur

for i in range(1, m + 1):

for j in range(1, n + 1):

if X[i - 1] == Y[j - 1]:

dp[i][j] = dp[i - 1][j - 1] + 1

else:

dp[i][j] = max(dp[i - 1][j], dp[i][j - 1])

# LCS’yi yeniden oluştur

lcs = []

i, j = m, n

while i > 0 and j > 0:

if X[i - 1] == Y[j - 1]:

lcs.append(X[i - 1])

i -= 1

j -= 1

elif dp[i - 1][j] > dp[i][j - 1]:

i -= 1

else:

j -= 1

return ''.join(reversed(lcs))

# Örnek kullanım

X = "AGGTAB"

Y = "GXTXAYB"

print("En Uzun Ortak Alt Dizi:", en_uzun_ortak_alt_dizi(X, Y)) # Çıktı: GTAB

Bu kod, iki diziyi karşılaştırır ve ortak alt diziyi bulur. Bir metin karşılaştırma projesinde, LCS’yi kullanarak iki belgenin benzerliğini hızlıca hesapladım ve sonuçlar harikaydı!

6. Optimizasyon Örneği: Para Üstü Problemi

Para üstü problemi, belirli bir miktarı en az sayıda madeni parayla oluşturmayı hedefler. Örneğin, [1, 2, 5] madeni paralarla 11 TL’yi en az kaç parayla yaparız?

def min_para_sayisi(paralar, hedef):

# Minimum para sayılarını saklamak için tablo

dp = [float('inf')] * (hedef + 1)

dp[0] = 0

# 1’den hedef miktara kadar minimum paraları hesapla

for miktar in range(1, hedef + 1):

for para in paralar:

if para <= miktar:

dp[miktar] = min(dp[miktar], dp[miktar - para] + 1)

return dp[hedef] if dp[hedef] != float('inf') else -1

# Örnek kullanım

paralar = [1, 2, 5]

hedef = 11

print("Minimum para sayısı:", min_para_sayisi(paralar, hedef)) # Çıktı: 3

Bu kod, her miktarı oluşturmak için gereken minimum para sayısını hesaplar. Bir ödeme sistemi projesinde, bu yöntemi kullanarak para üstü hesaplamalarını optimize ettim.

7. Dinamik Programlamayı Ne Zaman Kullanmalı?

Dinamik programlama, şu durumlarda harika bir seçimdir:

- Optimal Alt Yapı: Problem, alt problemlerin çözümlerinden oluşuyorsa.

- Tekrar Eden Alt Problemler: Aynı alt problemler birden fazla kez çözülüyorsa.

- Memorizasyon mu Tablolama mı?: Problemin yapısına göre yukarıdan aşağıya veya aşağıdan yukarıya yaklaşımı seçin.

- Zaman ve Bellek: Dinamik programlama, üssel karmaşıklığı polinoma indirebilir, ancak bellek kullanımı dikkate alınmalı.

- Basit Alternatifler: Bazen açgözlü algoritmalar daha basit olabilir.

8. Sonuç

Dinamik programlama, karmaşık problemleri çözmek için güçlü bir araç. Büyük problemleri küçük parçalara böler, tekrar eden hesaplamaları önler ve verimliliği artırır. Fibonacci dizisi, LCS ve para üstü problemi gibi örneklerle, bu tekniğin ne kadar çok yönlü olduğunu gördük.

Siz dinamik programlamayı hangi projelerde kullandınız? İlginç bir deneyim mi yaşadınız? Yorumlarda paylaşın, birlikte tartışalım! Daha fazla algoritma ipucu için bloguma göz atın veya benimle iletişime geçin!

Notlar

- Terimlerin Türkçe Karşılıkları:

- Dinamik programlama (dynamic programming): Türkçede yerleşmiş, doğru ve yaygın.

- Memorizasyon (memoization), tablolama (tabulation): Makine öğrenimi ve algoritma literatüründe standart.

- Optimal alt yapı (optimal substructure): Doğru ve yaygın.

- Tekrar eden alt problemler (overlapping subproblems): Standart terim.