Mastering Decision Trees: A Practical Guide with Python

Decision trees are among the best-loved tools in machine learning…

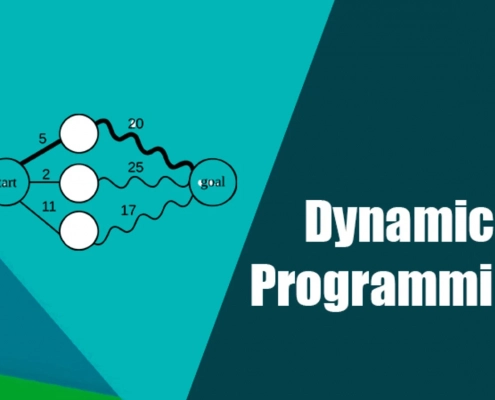

Understanding Dynamic Programming: A Guide with Code Examples

Dynamic programming (DP), in computer science, complex…

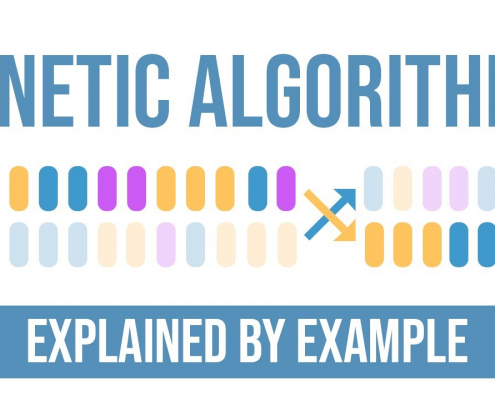

Genetic Algorithm: Evolving the Perfect Sort

Sorting is one of the cornerstones of computer science.…

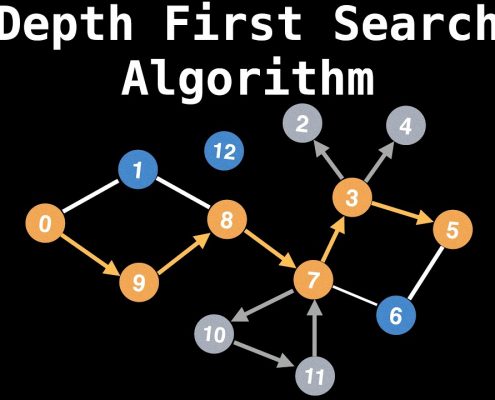

A Guide to the Depth-First Search (DFS) Algorithm

In the world of graph theory and algorithms, Depth-First…

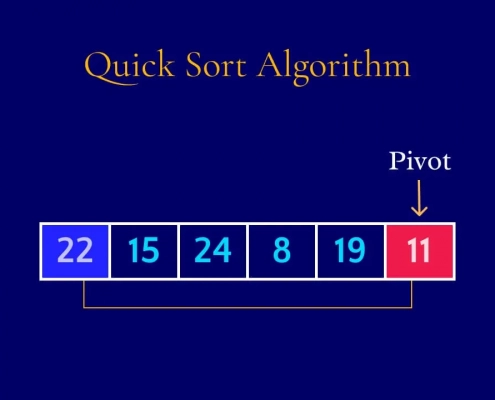

A Guide to Quick Sort: A Divide-and-Conquer Algorithm

Sorting is one of the cornerstones of computer science.…

A Guide to Merge Sort: A Divide-and-Conquer Algorithm

Sorting is one of the cornerstones of computer science.…