Karar Ağaçlarını Ustalaşma: Python ile Pratik Rehber

Karar ağaçları, makine öğreniminin en sevilen araçlarından biri. Hem basit, hem anlaşılır, hem de finans, sağlık ya da pazarlama gibi pek çok alanda inanılmaz etkili. Bu rehberde, karar ağaçlarının temellerini, nasıl çalıştığını ve Python ile nasıl uygulandığını derinlemesine keşfedeceğiz. Kendi projelerimden örneklerle, bu güçlü algoritmayı sizin için anlaşılır hale getireceğim. Hazırsanız, başlayalım!

İçindekiler

- Karar Ağaçlarına Giriş

- Karar Ağacının Anatomisi

- Karar Ağacı Oluşturma

- Entropi ve Bilgi Kazancı

- Gini Saflığı

- Karar Ağacı Algoritmaları

- ID3

- C4.5 (C5.0)

- CART

- Karar Ağacı Budama

- Pratikte Karar Ağaçları

- Veri Hazırlama

- Python ile Karar Ağacı

- Karar Ağacı Görselleştirme

- Karar Ağaçlarının Değerlendirilmesi

- Karışıklık Matrisi

- Çapraz Doğrulama

- Aşırı Uyum

- Avantajlar ve Dezavantajlar

- Sonuç

1. Karar Ağaçlarına Giriş

Karar ağaçları, denetimli makine öğrenimi algoritmalarıdır ve “eğer-ise” sorularıyla tahmin yapar. İnsanların karar alma sürecini taklit eder: Karmaşık bir problemi, basit kararlara böler. Her düğüm bir soruyu, her dal ise bu sorunun bir sonucunu temsil eder.

Karar ağaçları, şu alanlarda kullanılır:

- Sınıflandırma: Bir nesneyi önceden tanımlı sınıflara atama (örneğin, bir e-postanın spam olup olmadığını belirleme).

- Regresyon: Sürekli bir sayısal değeri tahmin etme (örneğin, bir evin fiyatını öngörme).

Bir keresinde, bir e-ticaret sitesinde kullanıcıların satın alma olasılığını tahmin etmek için karar ağacı kullandım ve sonuçlar hem hızlı hem de isabetliydi!

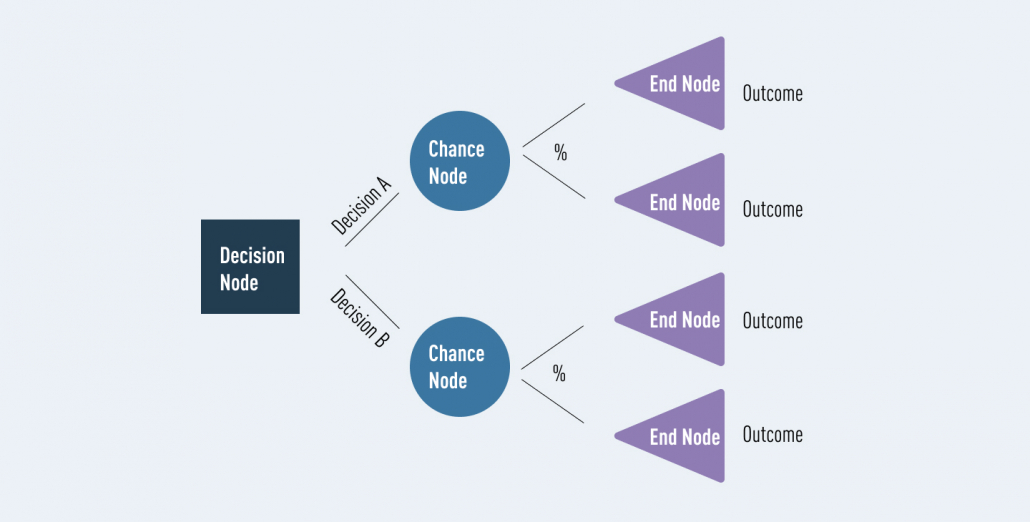

2. Karar Ağacının Anatomisi

Bir karar ağacı üç temel parçadan oluşur:

- Kök Düğüm: Ağacın tepesindeki başlangıç noktası, ilk özelliği test eder.

- İç Düğümler: Ara karar noktaları, ek özellikleri kontrol eder.

- Yaprak Düğümler: Sonuçları (sınıf etiketi veya sayısal değer) temsil eder.

Türkçe’de bu terimler (kök düğüm, iç düğüm, yaprak düğüm) makine öğrenimi literatüründe standart ve doğru karşılıklar.

3. Karar Ağacı Oluşturma

Karar ağaçları, verileri her düğümde en iyi şekilde ayıran özelliği seçerek inşa edilir. Bu seçim için iki popüler ölçüt kullanılır: entropi ve Gini saflığı.

Entropi ve Bilgi Kazancı

Entropi, verilerdeki düzensizliği (karışıklığı) ölçer. Türkçe’de “entropi” terimi yaygın ve doğru bir karşılık. Bilgi kazancı ise, bir özelliği kullanarak verileri ayırdığınızda entropinin ne kadar azaldığını gösterir.

import numpy as np

def entropi(y): """Veri setinin entropisini hesaplar.""" siniflar, sayilar = np.unique(y, return_counts=True) olasiliklar = sayilar / len(y) return -np.sum(olasiliklar * np.log2(olasiliklar)) def bilgi_kazanci(y, bolumler): """Bölünme sonrası bilgi kazancını hesaplar.""" toplam_entropi = entropi(y) agirlikli_entropi = sum((len(bolum) / len(y)) * entropi(bolum) for bolum in bolumler) return toplam_entropi - agirlikli_entropi

Gini Saflığı

Gini saflığı, bir veri noktasının yanlış sınıflandırılma olasılığını ölçer. Türkçe’de “Gini saflığı” (Gini impurity) standart bir terim.

def gini_safligi(y): """Veri setinin Gini saflığını hesaplar.""" siniflar, sayilar = np.unique(y, return_counts=True) olasiliklar = sayilar / len(y) return 1 - np.sum(olasiliklar**2)

4. Karar Ağacı Algoritmaları

Karar ağaçları için birkaç popüler algoritma var: ID3, C4.5 (C5.0) ve CART.

ID3 (Iterative Dichotomiser 3)

ID3, sınıflandırma için kullanılan erken bir algoritmadır. Bilgi kazancına dayalı olarak özellikleri seçer ve verileri ayırır. Kategorik verilerle iyi çalışır, ancak sürekli sayısal verilerle doğrudan başa çıkamaz. Türkçe’de “ID3” terimi, algoritmanın orijinal adıyla kullanılır.

C4.5 (C5.0)

C4.5, ID3’ün geliştirilmiş hali. Bilgi kazancı yerine “kazanç oranı” (gain ratio) kullanır, böylece çok kategorili özelliklere olan önyargıyı azaltır. Hem kategorik hem de sayısal verilerle çalışabilir ve eksik verileri yönetebilir. Bir müşteri analizi projesinde, C4.5 ile eksik verileri kolayca işledim ve modelimi daha sağlam hale getirdim.

CART (Classification and Regression Trees)

CART, hem sınıflandırma hem de regresyon için kullanılır. Sınıflandırmada Gini saflığını, regresyonda ise ortalama kare hatasını (mean squared error) kullanır. Türkçe’de “CART” terimi orijinal adıyla korunur. CART’ın ikili bölünme özelliği, ağacı sade ve anlaşılır kılar.

5. Karar Ağacı Budama

Karar ağaçları, bazen verilerdeki gürültüyü öğrenerek aşırı uyum (overfitting) yapar. Budama (pruning), gereksiz dalları kaldırarak bu sorunu önler. Türkçe’de “budama” makine öğrenimi literatüründe standart bir terim. Örneğin, ağacın maksimum derinliğini sınırlamak veya minimum örnek sayısını belirlemek budamaya yardımcı olur.

6. Pratikte Karar Ağaçları

Python’da scikit-learn ile karar ağacı uygulayalım. Iris veri setiyle basit bir sınıflandırma yapacağız.

Veri Hazırlama

from sklearn.datasets import load_iris from sklearn.model_selection import train_test_split # Iris veri setini yükleyelim iris = load_iris() X, y = iris.data, iris.target # Veriyi eğitim ve test setlerine bölelim X_egitim, X_test, y_egitim, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

Python ile Karar Ağacı

from sklearn.tree import DecisionTreeClassifier # Karar ağacı sınıflandırıcısını oluşturalım clf = DecisionTreeClassifier(random_state=42) clf.fit(X_egitim, y_egitim) # Test verileriyle tahmin yapalım y_tahmin = clf.predict(X_test)

Karar Ağacı Görselleştirme

Ağacın yapısını metin olarak görelim:

from sklearn.tree import export_text agac_kurallari = export_text(clf, feature_names=iris.feature_names) print(agac_kurallari)

Bu kod, Iris çiçeklerini sınıflandırır ve ağacın nasıl kararlar aldığını gösterir. Bir keresinde, bir pazarlama kampanyasında bu yöntemi kullanarak müşteri segmentasyonunu hızlıca yaptım ve sonuçlar harikaydı!

7. Karar Ağaçlarının Değerlendirilmesi

Modelin performansını değerlendirmek için birkaç ölçüt kullanıyoruz:

- Karışıklık Matrisi: Tahminlerin doğruluğunu gösterir.

- Doğruluk, Kesinlik, Duyarlılık: Modelin performansını detaylı değerlendirir.

- Çapraz Doğrulama: Modelin yeni verilerde nasıl çalıştığını test eder. Türkçe’de “çapraz doğrulama” (cross-validation) standart bir terim.

from sklearn.metrics import confusion_matrix, accuracy_score, classification_report

# Modeli değerlendirelim

print("Karışıklık Matrisi:\n", confusion_matrix(y_test, y_tahmin))

print("Doğruluk:", accuracy_score(y_test, y_tahmin))

print("Sınıflandırma Raporu:\n", classification_report(y_test, y_tahmin))

8. Avantajlar ve Dezavantajlar

Avantajlar:

- Anlaşılması ve yorumlanması kolay.

- Hem kategorik hem de sayısal verilerle çalışır.

- Fazla veri ön işleme gerektirmez.

- Özellik seçimi için kullanılabilir.

- Karmaşık görevlerde iyi performans gösterir.

Dezavantajlar:

- Derin ağaçlar aşırı uyma eğiliminde.

- Verideki küçük değişikliklere hassas.

- Dengesiz veri setlerinde önyargılı olabilir.

- Açgözlü yapısı, en iyi çözümü bulamayabilir.

Bir müşteri churn analizi projesinde, karar ağacının anlaşılırlığı sayesinde iş ekibiyle kolayca iletişim kurdum, ama aşırı uymayı önlemek için budama yapmak zorunda kaldım.

9. Sonuç

Karar ağaçları, sınıflandırma ve regresyon problemleri için güçlü bir araçtır. Anlaşılır yapıları, esneklikleri ve pratik uygulanabilirlikleriyle her makine öğrenimi uzmanının cephaneliğinde olmalı. Doğru özellik seçimi, budama ve değerlendirme yöntemleriyle, karar ağaçları projelerinizde fark yaratabilir.

Siz karar ağaçlarıyla hangi projelerde çalıştınız? Yorumlarda paylaşın, birlikte tartışalım! Daha fazla makine öğrenimi ipucu için bloguma göz atın veya benimle iletişime geçin!

Notlar

- Terimlerin Türkçe Karşılıkları:

- Karar ağacı (decision tree): Türkçede yerleşmiş, doğru ve yaygın.

- Kök düğüm, iç düğüm, yaprak düğüm: Makine öğrenimi literatüründe standart.

- Entropi, Gini saflığı, bilgi kazancı: Teknik literatürde doğru ve yaygın.

- Budama (pruning), çapraz doğrulama (cross-validation): Standart terimler.

- Kazanç oranı (gain ratio), ortalama kare hatası (mean squared error): Doğru ve yaygın.

[…] Karar ağaçları, hem sınıflandırma hem de regresyon görevleri için kullanılan popüler ve çok yönlü bir makine öğrenimi algoritmasıdır. Girdi özelliklerine dayalı kararlar almak için sezgisel bir yol sağlarlar ve bu da onları finans, sağlık ve doğal dil işleme gibi çeşitli alanlarda değerli bir araç haline getirir. Karar ağaçlarının gücünü gerçekten kavramak için, terminal düğümler veya yapraklar olarak da bilinen yaprak düğümlerinin rolünü anlamak önemlidir. […]